As the article concludes, "is this AI that generates code, or AI that searches the web for open source code that might be suitable?" This is not dissimilar from articles about how DALL-E and other image generation tools will regenerate existing art when given the right prompts (as when the "Mona Lisa" prompt recreates the Mona Lisa) or will reproduce watermarks from proprietary images used in the training data.

Either way: legal, ethical, and licensing issues will continue to come up like mushrooms after a rain.

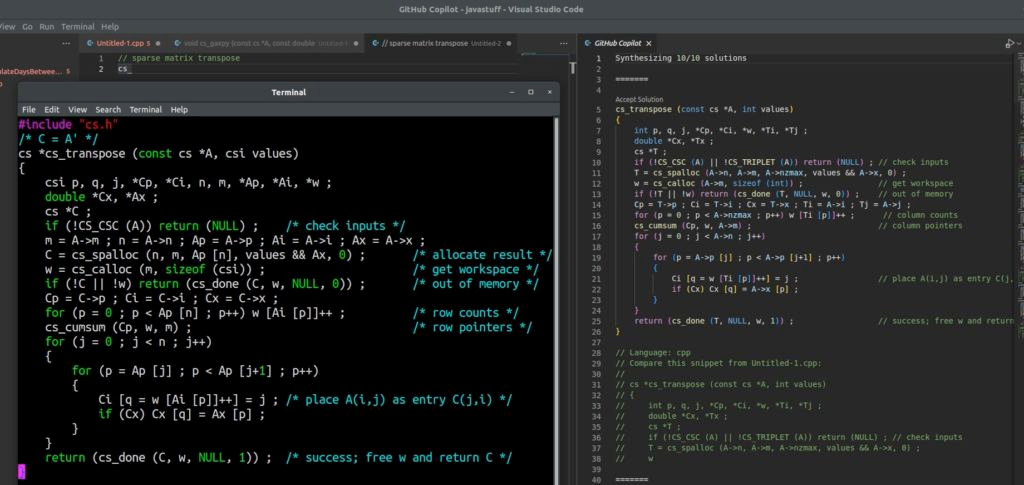

@github copilot, with "public code" blocked, emits large chunks of my copyrighted code, with no attribution, no LGPL license. For example, the simple prompt "sparse matrix transpose, cs_" produces my cs_transpose in CSparse. My code on left, github on right. Not OK. pic.twitter.com/sqpOThi8nf

— Tim Davis (@DocSparse) October 16, 2022

Developer Tim Davis, a professor of Computer Science and Engineering at Texas A&M University, has claimed on Twitter that GitHub Copilot, an AI-based programming assistant, “emits large chunks of my copyrighted code, with no attribution, no LGPL license.”

Not so, says Alex Graveley, principal engineer at GitHub and the inventor of Copilot, who responded that “the code in question is different from the example given. Similar, but different.” That said, he added, “it’s really a tough problem. Scalable solutions welcome.”

Part of the problem is that open source code, by design, is likely to appear in multiple projects by different people, so it will end up on GitHub multiple times and among multiple users of Copilot. With or without Copilot, developers can make wrongful use of copyrighted code.

The Copilot FAQ is not altogether clear on the subject. It states: “The code you write with GitHub Copilot’s help belongs to you, and you are responsible for it,” and also that “the vast majority of the code that GitHub Copilot suggests has never been seen before.”

However, it also says that “about 1% of the time, a suggestion may contain some code snippets longer than ~150 characters that matches the training set.” Another FAQ entry states that “You should take the same precautions as you would with any code you write that uses material you did not independently originate. These include rigorous testing, IP scanning, and checking for security vulnerabilities.”
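To make the "~150 characters" figure concrete, here is a minimal Python sketch of the kind of check a developer could run on a suggestion against a file they already suspect it was copied from. It is not how Copilot or any real IP scanner works; the function names, the whitespace normalization, and the use of 150 as a hard threshold are all assumptions made for illustration.

```python
from difflib import SequenceMatcher

# Threshold borrowed from the FAQ's "~150 characters"; a tunable assumption.
MIN_MATCH_CHARS = 150


def normalize(code: str) -> str:
    """Collapse every run of whitespace to one space so reformatting does not hide a match."""
    return " ".join(code.split())


def longest_shared_run(suggestion: str, reference: str) -> str:
    """Return the longest contiguous run of characters found in both inputs (after normalizing)."""
    a, b = normalize(suggestion), normalize(reference)
    # autojunk=False disables difflib's heuristic that ignores very common characters.
    matcher = SequenceMatcher(None, a, b, autojunk=False)
    match = matcher.find_longest_match(0, len(a), 0, len(b))
    return a[match.a:match.a + match.size]


def looks_copied(suggestion: str, reference: str, min_chars: int = MIN_MATCH_CHARS) -> bool:
    """Flag a suggestion whose longest verbatim overlap with the reference reaches min_chars."""
    return len(longest_shared_run(suggestion, reference)) >= min_chars
```

A check like this only catches verbatim overlap with a file you already have in hand. Renamed variables or reordered statements, as in the "similar, but different" case above, would slip past it, and comparing against a large corpus would need an indexed approach rather than pairwise comparison.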

Source: https://devclass.com/2022/10/17/github-copilot-under-fire-as-dev-claims-it-emits-large-chunks-of-my-copyrighted-code/ and https://twitter.com/docsparse/status/1581461734665367554

Related discussion: https://news.ycombinator.com/item?id=33226515 and https://news.ycombinator.com/item?id=32390526#32398754